By Michael Phillips | TechBay.News

At CES 2026, NVIDIA made one of its boldest moves yet in artificial intelligence, unveiling Alpamayo—a fully open-source family of AI models, simulation tools, and datasets designed to help autonomous vehicles reason through real-world driving problems more like humans do.

In a keynote that cut through much of the hype surrounding generative AI, CEO Jensen Huang described Alpamayo as a “ChatGPT moment for physical AI.” The analogy was deliberate: just as large language models transformed text-based reasoning, NVIDIA is betting that reasoning-based AI will be the missing ingredient for safer, more trustworthy self-driving systems.

From Reactive Code to Reasoned Decisions

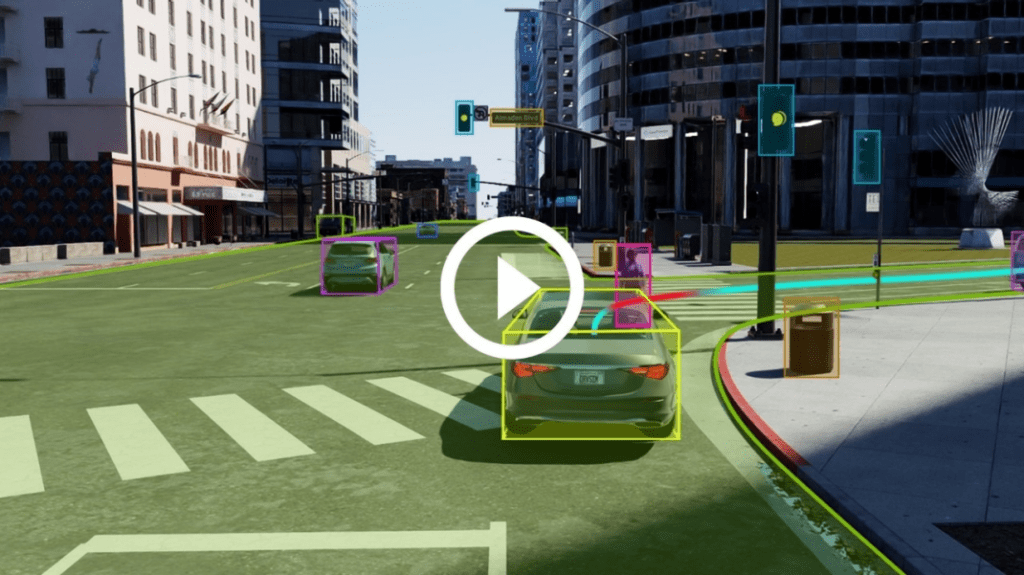

At the center of the announcement is Alpamayo 1, a 10-billion-parameter vision-language-action (VLA) model. Unlike traditional autonomous vehicle stacks that rely heavily on object detection and rigid rules, Alpamayo explicitly reasons through scenarios step by step.

If a traffic light fails at a busy intersection—one of the “long-tail” edge cases that plague self-driving systems—the model can break down the situation, evaluate risks, explain its logic in human-readable language, and select the safest maneuver. That emphasis on explainability is not accidental. In safety-critical systems, transparency is increasingly a regulatory and public trust requirement, not a luxury.

This approach aligns with a more conservative, engineering-first philosophy: fewer black boxes, more auditable decisions, and clearer accountability when something goes wrong.

An Open-Source Bet on Industry Standards

Perhaps the most striking element of Alpamayo is NVIDIA’s decision to release the stack openly. Model weights and the driving dataset are published on Hugging Face, while the AlpaSim simulation tools live on GitHub. License terms are set per component, so teams planning commercial use should check each one.

The release includes:

- Over 1,700 hours of real-world, multi-sensor driving data, including rare and difficult scenarios.

- AlpaSim, a high-fidelity simulation framework for closed-loop testing.

- Integration with NVIDIA’s Cosmos generative models to create synthetic training data.

This strategy positions NVIDIA less as a single autonomous driving winner and more as the infrastructure layer—the “Android of autonomy.” Automakers and startups can fine-tune Alpamayo into smaller, deployable models or use it as a “teacher” system to improve their own stacks.

For a market that has struggled with fragmentation and proprietary silos, this could be a stabilizing force.

A Clear Contrast With Closed Systems

The open approach inevitably invites comparisons with Tesla, whose Full Self-Driving system remains closed and vision-only. Tesla advocates argue that massive fleet data gives them an unbeatable edge, while critics point to opacity and regulatory friction.

Meanwhile, Waymo continues to lead in real-world deployment, operating fully driverless robotaxi services across multiple U.S. cities. Waymo’s system is proven, but expensive, sensor-heavy, and tightly controlled.

Alpamayo is not competing directly with either approach, at least not yet. Instead, NVIDIA is offering the broader industry a shared foundation that could lower costs, improve safety, and accelerate innovation without forcing everyone into a single proprietary ecosystem.

Early Partnerships and Near-Term Deployment

NVIDIA says Alpamayo will see its first production use in vehicles from Mercedes-Benz, with U.S. rollouts expected in early 2026 and international expansions later in the year. Other partners reportedly include Jaguar Land Rover, Lucid Motors, and Uber, signaling interest from both premium automakers and mobility platforms.

Importantly, the system uses a dual-stack safety architecture, pairing AI reasoning with rule-based fallbacks—an approach likely to resonate with regulators and risk-averse manufacturers.
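To make the dual-stack idea concrete, here is a minimal sketch of how a rule-based layer might check an AI-proposed plan and override it when a hard constraint is violated. It is an illustration only, not NVIDIA’s implementation: the `Plan` and `Scene` types, the `MIN_GAP_M` threshold, and the three rules are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    maneuver: str            # e.g. "proceed", "stop", "stop_then_proceed"
    target_speed_mps: float
    rationale: str           # human-readable explanation of the choice


@dataclass(frozen=True)
class Scene:
    speed_limit_mps: float
    gap_to_lead_m: float     # distance to the vehicle ahead
    signal_working: bool


MIN_GAP_M = 5.0  # hypothetical minimum following gap, for illustration only


def rule_violations(plan: Plan, scene: Scene) -> list[str]:
    """Return the hard rules (all hypothetical) that the proposed plan breaks."""
    violations = []
    if plan.target_speed_mps > scene.speed_limit_mps:
        violations.append("exceeds speed limit")
    if scene.gap_to_lead_m < MIN_GAP_M and plan.target_speed_mps > 0:
        violations.append("gap too small to keep moving")
    if not scene.signal_working and plan.maneuver == "proceed":
        violations.append("must stop first at a failed signal")
    return violations


def arbitrate(ai_plan: Plan, scene: Scene) -> Plan:
    """Accept the AI plan only if it passes every rule; otherwise fall back."""
    violations = rule_violations(ai_plan, scene)
    if not violations:
        return ai_plan
    reason = "rule-based fallback: " + "; ".join(violations)
    return Plan("stop", 0.0, reason)


if __name__ == "__main__":
    scene = Scene(speed_limit_mps=13.4, gap_to_lead_m=30.0, signal_working=False)
    risky = Plan("proceed", 10.0, "intersection looks clear")
    print(arbitrate(risky, scene))
```

In this sketch the reasoning model only proposes. The rule layer has the final say, and the rationale string records why a plan was accepted or overridden, which is the kind of audit trail the article describes.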

Why This Matters Beyond Self-Driving Cars

Beyond autonomous vehicles, Alpamayo reflects a broader shift in AI development: away from opaque, purely reactive models and toward systems that can explain their reasoning. That has implications not just for transportation, but for robotics, logistics, and any domain where AI interacts with the physical world.

For a center-right audience skeptical of unchecked automation, NVIDIA’s move is notable precisely because it emphasizes accountability, competition, and open standards over hype and monopolization.

The Bottom Line

Alpamayo does not mean robotaxis everywhere tomorrow. Real-world deployment, regulatory approval, and public trust remain steep hurdles. But by open-sourcing reasoning-based AI for autonomy, NVIDIA has changed the conversation.

Instead of asking who will “win” self-driving, Alpamayo asks a more pragmatic question: can we build systems that are safer, more transparent, and easier to audit? In a sector defined by bold promises and slow reality, that may be the most consequential shift of all.
